Derek Bradley, Ph.D.

Director, Research & Development

DisneyResearch|Studios

PhD, University of British Columbia, 2010

MCS, Carleton University, 2005

BCS, Carleton University, 2003

derek.bradley (at) disneyresearch.com

I am a Director of Research & Development at DisneyResearch|Studios in Zurich, Switzerland, where I also lead the Digital Humans research team. I am interested in various problems that involve creating digital humans, including digital scanning, facial performance capture and animation, performance retargeting, neural face models, and visual effects for films.

Publications

Learning a Generalized Physical Face Model From Data

L. Yang, G. Zoss, P. Chandran, M. Gross, B. Solenthaler, E. Sifakis, D. Bradley

arXiv pre-print. 2024. (Accepted to SIGGRAPH 2024)

We aim to make physics-based facial animation more accessible by proposing a generalized physical face model that we learn from a large 3D face dataset in a simulation-free manner. Once trained, our model can be quickly fit to any unseen identity and produce a ready-to-animate physical face model automatically.

[arXiv]

Artist-Friendly Relightable and Animatable Neural Heads

Y. Xu, P. Chandran, S. Weiss, M. Gross, G. Zoss, D. Bradley

arXiv pre-print. 2023. (Accepted to CVPR 2024)

We propose a new method for relightable and animatable neural heads that builds on a mixture of volumetric primitives and is combined with a lightweight hardware setup. Its novel architecture allows dynamic neural avatars performing unseen expressions to be relit in any environment, even with nearfield illumination and viewpoints.

[arXiv]

CADS: Unleashing the Diversity of Diffusion Models through Condition-Annealed Sampling

S. Sadat, J. Buhmann, D. Bradley, O. Hilliges, R. Weber

arXiv pre-print. 2023. (Accepted to ICLR 2024 as Spotlight)

We offer a new sampling strategy for conditional diffusion models that can increase generation diversity, especially at high guidance scales, with minimal loss of sample quality.

[arXiv]
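As a rough illustration of the condition-annealing idea, the Python sketch below corrupts a conditioning vector with Gaussian noise whose strength is annealed over the sampling trajectory: the condition is fully noised early in sampling (t near 1) and clean near the end (t near 0), with an optional rescaling step that restores the clean condition's mean and standard deviation. The linear schedule, the noise scale `s`, the mixing factor `psi`, the thresholds `tau1`/`tau2` and all function names are assumptions made for illustration; the default values are placeholders rather than tuned settings, and the diffusion model itself is omitted.

```python
import math
import random
import statistics


def cads_gamma(t, tau1, tau2):
    """Hypothetical linear annealing schedule; t runs from 1 (noise) to 0 (clean)."""
    if t <= tau1:
        return 1.0  # late in sampling: keep the condition clean
    if t >= tau2:
        return 0.0  # early in sampling: condition is pure noise
    return (tau2 - t) / (tau2 - tau1)


def anneal_condition(y, t, tau1=0.6, tau2=0.9, s=0.25, psi=1.0, rng=random):
    """Corrupt a conditioning vector y (list of floats) for sampling step t.

    tau1, tau2, s and psi are illustrative placeholders, not tuned values.
    """
    g = cads_gamma(t, tau1, tau2)
    noisy = [math.sqrt(g) * v + s * math.sqrt(1.0 - g) * rng.gauss(0.0, 1.0)
             for v in y]
    if psi == 0.0 or len(y) < 2:
        return noisy
    # Optional rescaling: give the noisy condition the clean mean and std,
    # then mix the rescaled and unrescaled versions with weight psi.
    mu_y, sd_y = statistics.fmean(y), statistics.pstdev(y)
    mu_n, sd_n = statistics.fmean(noisy), statistics.pstdev(noisy)
    rescaled = [(v - mu_n) / (sd_n + 1e-8) * sd_y + mu_y for v in noisy]
    return [psi * r + (1.0 - psi) * n for r, n in zip(rescaled, noisy)]
```

In a sampler, a function like `anneal_condition` would be called once per denoising step, before the conditional network evaluation.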

An Implicit Physical Face Model Driven by Expression and Style

L. Yang, G. Zoss, P. Chandran, P. Gotardo, M. Gross, B. Solenthaler, E. Sifakis, D. Bradley

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia). 2023. (Sydney, Australia)

We propose a new face model based on a data-driven implicit neural physics model that can be driven by both expression and style separately.

[Project Page]

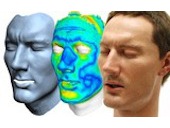

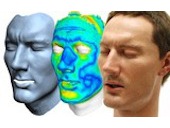

A Perceptual Shape Loss for Monocular 3D Face Reconstruction

C. Otto, P. Chandran, G. Zoss, M. Gross, P. Gotardo, D. Bradley

Computer Graphics Forum (Proceedings of Pacific Graphics). 2023. (Daejeon, Korea)

We propose a new loss function for monocular face capture, inspired by how humans would perceive the quality of a 3D face reconstruction given a particular image, e.g., by judging the quality of a 3D face estimate using only shading cues.

[Project Page]

ReNeRF: Relightable Neural Radiance Fields with Nearfield Lighting

Y. Xu, G. Zoss, P. Chandran, M. Gross, D. Bradley, P. Gotardo

International Conference on Computer Vision (ICCV). 2023. (Paris, France)

We present the first method for high quality relightable neural radiance fields with the ability to handle nearfield lighting, environment map rendering, and novel view interpolation, all without requiring a dense light stage.

[Project Page]

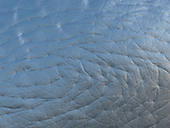

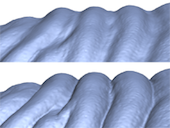

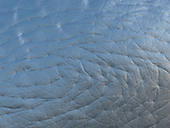

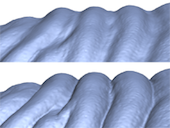

Graph-Based Synthesis for Skin Micro Wrinkles

S. Weiss, J. Moulin, P. Chandran, G. Zoss, P. Gotardo, D. Bradley

Computer Graphics Forum (Proceedings of Eurographics Symposium on Geometry Processing). 2023. (Genova, Italy).

We present a novel graph-based simulation approach for generating micro wrinkle geometry on human skin, which can easily scale to the micrometer range and to millions of wrinkles.

[Project Page]

Continuous Landmark Detection with 3D Queries

P. Chandran, G. Zoss, P. Gotardo, D. Bradley

IEEE Computer Vision and Pattern Recognition (CVPR). 2023. (Vancouver, Canada)

We propose the first facial landmark detection network that can predict continuous, unlimited landmarks, allowing the user to specify the number and location of the desired landmarks at inference time.

[Project Page]

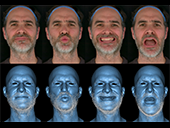

Production-Ready Face Re-Aging for Visual Effects

G. Zoss, P. Chandran, E. Sifakis, M. Gross, P. Gotardo, D. Bradley

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia). 2022. (Daegu, Korea)

We present the first practical, fully-automatic and production-ready method for re-aging faces in video images. Our new face re-aging network (FRAN) incorporates simple and intuitive artistic control and creative freedom to direct and fine-tune the re-aging effect.

[Project Page]

Learning Dynamic 3D Geometry and Texture for Video Face Swapping

C. Otto, J. Naruniec, L. Helminger, T. Etterlin, G. Mignone, P. Chandran, G. Zoss, C. Schroers, M. Gross, P. Gotardo, D. Bradley, R. Weber

Computer Graphics Forum (Proceedings of Pacific Graphics). 2022. (Kyoto, Japan)

We present a new method to swap the face of a target video performance with a new source identity. Our method learns dynamic 3D geometry and texture to obtain more realistic face swaps with better artistic control than common 2D methods.

[Project Page]

Facial Animation with Disentangled Identity and Motion using Transformers

P. Chandran, G. Zoss, M. Gross, P. Gotardo, D. Bradley

Eurographics Symposium on Computer Animation. 2022. (Durham, UK)

We propose a 3D+time framework for modeling dynamic sequences of 3D facial shapes that represents realistic non-rigid motion during a performance, using a transformer-based auto-encoder. Our approach has applications in performance synthesis, retargeting, interpolation, completion, denoising, and retiming.

[Project Page]

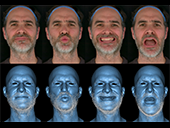

Facial Hair Tracking for High Fidelity Performance Capture

S. Winberg, G. Zoss, P. Chandran, P. Gotardo, D. Bradley

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2022. (Vancouver, Canada)

Facial hair is a largely overlooked problem in facial performance capture, requiring actors to shave clean before a capture session. We propose the first method that can reconstruct and track 3D facial hair fibers and approximate the underlying skin during dynamic facial performances.

[Project Page]

Local Anatomically Constrained Facial Performance Retargeting

P. Chandran, L. Ciccone, M. Gross, D. Bradley

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2022. (Vancouver, Canada)

We propose a novel anatomically constrained local facial animation retargeting technique that produces high-quality results for production pipelines while requiring fewer input shapes than standard techniques.

[Project Page]

MoRF: Morphable Radiance Fields for Multiview Neural Head Modeling

D. Wang, P. Chandran, G. Zoss, D. Bradley, P. Gotardo

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2022. (Vancouver, Canada)

Neural radiance fields (NeRFs) have provided photorealistic renderings of human heads, but they are so far limited to a single identity. We propose a new morphable radiance field (MoRF) method that extends a NeRF into a generative model that synthesizes human head images with variable identity and high photorealism.

[Project Page]
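For readers unfamiliar with neural radiance fields, the standard NeRF rendering step that such methods build on can be sketched as follows: densities and colors sampled along a camera ray are alpha-composited using the accumulated transmittance. This is a minimal Python sketch of the generic NeRF quadrature only, not MoRF's morphable model; the function name and the tuple-based data layout are hypothetical.

```python
import math


def composite_ray(sigmas, colors, deltas):
    """Alpha-composite samples along one ray (generic NeRF quadrature).

    sigmas: densities, colors: (r, g, b) tuples, deltas: distances between
    consecutive samples. Returns the rendered color and per-sample weights.
    """
    transmittance = 1.0  # T_i = exp(-sum_{j<i} sigma_j * delta_j)
    rgb = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        w = transmittance * alpha               # contribution of this sample
        weights.append(w)
        for k in range(3):
            rgb[k] += w * color[k]
        transmittance *= 1.0 - alpha            # light surviving past the sample
    return rgb, weights
```

The weights sum to at most one; whatever is left over is the transmittance that reaches the background.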

ABBA Voyage: High Volume Facial Likeness and Performance Pipeline

J. Plaete, D. Bradley, P. Warner, A. Zwartouw

ACM SIGGRAPH Talks. 2022. (Vancouver, Canada)

For the ABBA: Voyage concert experience, Industrial Light & Magic (ILM) was tasked with digitally time traveling the iconic band's members Agnetha, Anni-Frida, Björn and Benny back to their prime time appearances. Here we dive into the extensive research and development needed to generate four continuous photo-real digital human facial performances.

[ACM Page]

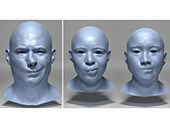

Shape Transformers: Topology-Independent 3D Shape Models Using Transformers

P. Chandran, G. Zoss, M. Gross, P. Gotardo, D. Bradley

Computer Graphics Forum (Proceedings of Eurographics). 2022. (Reims, France).

We present Shape Transformers, a new nonlinear parametric 3D shape model based on transformer architectures that can be trained on a mixture of 3D datasets of different topologies and spatial resolutions.

[Project Page]

Improved Lighting Models for Facial Appearance Capture

Y. Xu, J. Riviere, G. Zoss, P. Chandran, D. Bradley, P. Gotardo

Eurographics Short Papers. 2022. (Reims, France)

We demonstrate the impact on facial appearance capture quality of departing from idealized lighting conditions towards models that more accurately represent the lighting, while only minimally increasing the computational burden.

[Project Page]

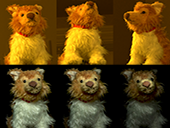

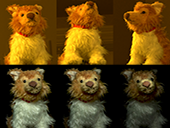

Rendering with Style: Combining Traditional and Neural Approaches for High-Quality Face Rendering

P. Chandran*, S. Winberg*, G. Zoss, J. Riviere, M. Gross, P. Gotardo, D. Bradley

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia). 2021. (Tokyo, Japan)

We propose a method to combine incomplete high-quality facial skin renders with generative neural face rendering in order to achieve photorealistic full head renders automatically.

[Project Page]

Adaptive Convolutions for Structure-Aware Style Transfer

P. Chandran, G. Zoss, P. Gotardo, M. Gross, D. Bradley

IEEE Computer Vision and Pattern Recognition (CVPR). 2021. (Virtual)

We propose Adaptive Convolutions (AdaConv), a generic extension of Adaptive Instance Normalization, to allow for the simultaneous transfer of both statistical and structural styles during style transfer between images.

[Project Page]
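For context, Adaptive Instance Normalization (AdaIN), which AdaConv generalizes, transfers only per-channel statistics: each content feature channel is normalized and then re-scaled and shifted by the mean and standard deviation of the corresponding style channel. The following minimal Python sketch shows AdaIN alone, assuming feature maps stored as lists of flattened channels; it does not include the structural, kernel-based part that AdaConv adds, and the function name is hypothetical.

```python
import statistics


def adain(content, style, eps=1e-5):
    """Adaptive Instance Normalization on per-channel feature lists.

    content, style: lists of channels, each channel a flat list of activations.
    Each content channel is normalized, then given the style channel's mean/std.
    """
    out = []
    for c, s in zip(content, style):
        c_mu, c_sd = statistics.fmean(c), statistics.pstdev(c)
        s_mu, s_sd = statistics.fmean(s), statistics.pstdev(s)
        # eps guards against division by zero for constant channels.
        out.append([s_sd * (v - c_mu) / (c_sd + eps) + s_mu for v in c])
    return out
```

Because only first- and second-order statistics are matched, spatial structure in the style image cannot be transferred this way, which is the limitation the extension above targets.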

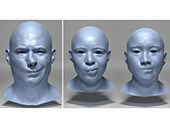

Semantic Deep Face Models

P. Chandran, D. Bradley, M. Gross, T. Beeler

International Conference on 3D Vision (oral). 2020. (Fukuoka, Japan)

We propose semantic deep face models: novel neural architectures for modelling and synthesising 3D human faces that can disentangle identity and expression, akin to traditional multi-linear models, but within a non-linear framework.

[Project Page]
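The traditional multi-linear models mentioned above can be illustrated with the two-factor (bilinear) case: a face is obtained by contracting a core tensor with an identity weight vector and an expression weight vector. The Python sketch below assumes a hypothetical core tensor `core[v][i][e]` (for example one obtained from a tensor decomposition of a registered face database) and is meant only to show this classic structure, which is linear in each factor separately; it is not the semantic deep face model itself.

```python
def bilinear_face(core, w_id, w_exp):
    """Evaluate a bilinear (identity x expression) face model.

    core[v][i][e]: core tensor with one entry per vertex coordinate v,
    identity basis i and expression basis e. Returns the stacked coordinates.
    """
    return [
        sum(core_v[i][e] * w_id[i] * w_exp[e]
            for i in range(len(w_id)) for e in range(len(w_exp)))
        for core_v in core
    ]
```

Fixing `w_id` and varying `w_exp` animates one person; fixing `w_exp` and varying `w_id` changes who is making the same expression.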

Single-Shot High-Quality Facial Geometry and Skin Appearance Capture

J. Riviere, P. Gotardo, D. Bradley, A. Ghosh, T. Beeler

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2020. (Washington, USA)

We propose a polarization-based, view-multiplexed system to capture high-quality facial geometry and skin appearance from a single exposure. The method readily extends widespread passive photogrammetry, also enabling dynamic performance capture.

[Project Page]

Data-Driven Extraction and Composition of Secondary Dynamics in Facial Performance Capture

G. Zoss, E. Sifakis, M. Gross, T. Beeler, D. Bradley

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2020. (Washington, USA)

We propose a data-driven method for both the removal of parasitic dynamic motion and the synthesis of desired secondary dynamics in performance capture sequences of facial animation.

[Project Page]

ETH-XGaze: A Large Scale Dataset for Gaze Estimation under Extreme Head Pose & Gaze Variation

X. Zhang, S. Park, T. Beeler, D. Bradley, S. Tang, O. Hilliges

European Conference on Computer Vision. 2020. (Glasgow, Scotland)

We propose a new gaze estimation dataset called ETH-XGaze, consisting of over one million high-resolution images of varying gaze under extreme head poses, which can significantly improve the robustness of gaze estimation methods across different head poses and gaze angles.

[Project Page]

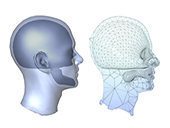

Interactive Sculpting of Digital Faces Using an Anatomical Modeling Paradigm

A. Gruber, M. Fratarcangeli, G. Zoss, R. Cattaneo, T. Beeler, M. Gross, D. Bradley

Computer Graphics Forum (Proceedings of Eurographics Symposium on Geometry Processing). 2020. (Utrecht, The Netherlands).

We propose a novel interactive method for the creation of digital faces that is simple and intuitive to use, even for novice users, while consistently producing plausible 3D face geometry, and allowing editing freedom beyond traditional video game avatar creation.

[Project Page]

Attention-Driven Cropping for Very High Resolution Facial Landmark Detection

P. Chandran, D. Bradley, M. Gross, T. Beeler

IEEE Computer Vision and Pattern Recognition (CVPR). 2020. (Seattle, USA).

Building on recent progress in attention-based networks, we present a novel, fully convolutional regional architecture that is specially designed for predicting landmarks on very high resolution facial images without downsampling.

[Project Page]

Fast Nonlinear Least Squares Optimization of Large-Scale Semi-Sparse Problems

M. Fratarcangeli, D. Bradley, A. Gruber, G. Zoss, T. Beeler

Computer Graphics Forum (Proceedings of Eurographics). 2020. (Norrköping, Sweden)

We introduce a novel iterative solver for nonlinear least squares optimization of large-scale semi-sparse problems, which allows several complex computer graphics problems to be solved efficiently on the GPU.

[Project Page]

Facial Expression Synthesis using a Global-Local Multilinear Framework

M. Wang, D. Bradley, S. Zafeiriou, T. Beeler

Computer Graphics Forum (Proceedings of Eurographics). 2020. (Norrköping, Sweden)

We introduce global-local multilinear face models, leveraging the strengths of expression- and identity-specific local models combined with coarse deformation from a global model, and show plausible 3D facial expression synthesis results.

[Project Page]

Accurate Real-time 3D Gaze Tracking Using a Lightweight Eyeball Calibration

Q. Wen, D. Bradley, T. Beeler, S. Park, O. Hilliges, J-H. Yong, F. Xu

Computer Graphics Forum (Proceedings of Eurographics). 2020. (Norrköping, Sweden)

We propose a novel model-fitting based approach for 3D gaze tracking that leverages a lightweight calibration scheme in order to track the 3D gaze of users in real time and with relatively high accuracy.

[Project Page]

Data-Driven Physical Face Inversion

Y. Kozlov, H. Xu, M. Bächer, D. Bradley, M. Gross, T. Beeler

arXiv:1907.10402

We propose to use a simple finite element simulation approach for face animation, and present a novel method for recovering the required simulation parameters in order to best match a real actor's face motion.

[arXiv Page]

Accurate Markerless Jaw Tracking for Facial Performance Capture

G. Zoss, T. Beeler, M. Gross, D. Bradley

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2019. (Los Angeles, USA)

We present the first method to accurately track the invisible jaw based solely on the visible skin surface, without the need for any markers or augmentation of the actor.

[Project Page]

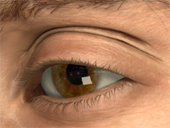

Practical Person-Specific Eye Rigging

P. Bérard, D. Bradley, M. Gross, T. Beeler

Computer Graphics Forum (Proceedings of Eurographics). 2019. (Genova, Italy)

We present a novel parametric eye rig for eye animation, together with a new multi-view imaging system that reconstructs eye poses with submillimeter accuracy; we fit our new rig to these reconstructions.

[Project Page]

Practical Dynamic Facial Appearance Modeling and Acquisition

P. Gotardo, J. Riviere, D. Bradley, A. Ghosh, T. Beeler

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia). 2018. (Tokyo, Japan)

We present a method to acquire dynamic properties of facial skin appearance, including dynamic diffuse albedo encoding blood flow, dynamic specular intensity, and per-frame high-resolution normal maps for a facial performance sequence.

[Project Page]

Appearance Capture and Modeling of Human Teeth

Z. Velinov, M. Papas, D. Bradley, P. Gotardo, P. Mirdehghan, S. Marschner, J. Novak, T. Beeler

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia). 2018. (Tokyo, Japan)

We present a system specifically designed for capturing the optical properties of live human teeth such that they can be realistically re-rendered in computer graphics applications such as visual effects for films or computer games.

[Project Page]

An Empirical Rig for Jaw Animation

G. Zoss, D. Bradley, P. Bérard, T. Beeler

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2018. (Vancouver, Canada)

We propose a novel jaw rig, empirically designed from captured data, that provides more accurate jaw positioning and constrains the motion to physiologically possible poses while offering intuitive control.

[Project Page]

User-Guided Lip Correction for Facial Performance Capture

D. Dinev, T. Beeler, D. Bradley, M. Bächer, H. Xu, L. Kavan

Eurographics Symposium on Computer Animation. 2018. (Paris, France)

We present a user-guided method for correcting lips in high-quality facial performance capture, targeting systematic errors in reconstruction due to the complex appearance and occlusion/dis-occlusion of the lips.

[Project Page]

State of the Art on Monocular 3D Face Reconstruction, Tracking, and Applications

M. Zollhöfer, J. Thies, P. Garrido, D. Bradley, T. Beeler, P. Perez, M. Nießner, M. Stamminger, C. Theobalt

Computer Graphics Forum (Proceedings of Eurographics - State of the Art Reports). 2018. (Delft, The Netherlands).

We provide an in-depth overview of recent techniques for monocular 3D face reconstruction, including image formation, common assumptions and simplifications, priors, optimization techniques, and use cases in the context of motion capture, facial animation, and image/video editing.

[Paper]

Enriching Facial Blendshape Rigs with Physical Simulation

Y. Kozlov, D. Bradley, M. Bächer, B. Thomaszewski, T. Beeler, M. Gross

Computer Graphics Forum (Proceedings of Eurographics). 2017. (Lyon, France)

We present a simple and intuitive system for adding physics to facial blendshape animation. Our novel simulation framework uses the original animation as per-frame rest poses without adding spurious forces. We also propose the concept of blendmaterials, which give artists an intuitive means to account for changing material properties due to muscle activation.

[Project Page]
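A delta blendshape rig evaluates a face as the neutral shape plus a weighted sum of per-shape offsets. The Python sketch below shows that evaluation and, as an illustrative assumption only, blends per-element material parameters with the same weights to mimic the idea of blendmaterials; the actual material model and blending used in the paper may differ, and all names here are hypothetical. The physics simulation that consumes these per-frame rest shapes and materials is omitted.

```python
def evaluate_rig(neutral, deltas, weights, base_material, material_deltas):
    """Delta-blendshape evaluation with an analogous 'blendmaterial' blend.

    neutral: list of (x, y, z); deltas[k]: per-vertex offsets of shape k;
    base_material: per-element parameters; material_deltas[k]: their offsets.
    Assumption: materials are blended linearly with the blendshape weights.
    """
    # Rest shape for this frame: neutral + sum_k w_k * delta_k.
    shape = [list(p) for p in neutral]
    for w, delta in zip(weights, deltas):
        for v, d in enumerate(delta):
            for c in range(3):
                shape[v][c] += w * d[c]
    # Per-element material parameters blended with the same weights.
    material = list(base_material)
    for w, m_delta in zip(weights, material_deltas):
        for e, dm in enumerate(m_delta):
            material[e] += w * dm
    return shape, material
```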

Simulation-Ready Hair Capture

L. Hu, D. Bradley, H. Li, T. Beeler

Computer Graphics Forum (Proceedings of Eurographics). 2017. (Lyon, France)

We present the first method for capturing dynamic hair and automatically determining the physical properties for simulating the observed hairstyle in motion. Our dynamic inversion is agnostic to the simulation model, and hence the proposed method applies to virtually any hair simulation technique.

[Project Page]

Real-Time Multi-View Facial Tracking with Synthetic Training

M. Klaudiny, S. McDonagh, D. Bradley, T. Beeler, K. Mitchell

Computer Graphics Forum (Proceedings of Eurographics). 2017. (Lyon, France)

We present a real-time multi-view facial capture system facilitated by synthetic training imagery. Our method is able to achieve high-quality markerless facial performance capture in real-time from multi-view helmet camera data, employing an actor-specific regressor.

[Project Page]

Model-Based Teeth Reconstruction

C. Wu, D. Bradley, P. Garrido, M. Zollhöfer, C. Theobalt, M. Gross, T. Beeler

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia). 2016. (Macao)

We present the first approach for non-invasive reconstruction of an entire person-specific tooth row from just a sparse set of photographs of the mouth region. The basis of our approach is a new parametric tooth row prior learned from high-quality dental scans.

[Project Page]

Corrective 3D Reconstruction of Lips from Monocular Video

P. Garrido, M. Zollhöfer, C. Wu, D. Bradley, P. Pérez, T. Beeler, C. Theobalt

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia). 2016. (Macao)

We present a method to reconstruct detailed and expressive lip shapes from monocular RGB video. To this end, we learn the difference between inaccurate lip shapes found by a monocular facial capture method, and the true 3D lip shapes reconstructed using a high-quality multi-view system, and finally infer accurate lip shapes in a regression framework.

[Project Page]

Synthetic Prior Design for Real-Time Face Tracking

S. McDonagh, M. Klaudiny, D. Bradley, T. Beeler, K. Mitchell, I. Matthews

International Conference on 3D Vision. 2016. (Stanford, USA)

We propose to learn a synthetic actor-specific prior for real-time facial tracking. We construct better and smaller training sets by investigating which facial image appearances are crucial for tracking accuracy, covering the dimensions of expression, viewpoint and illumination.

[Project Page]

Lightweight Eye Capture Using a Parametric Model

P. Bérard, D. Bradley, M. Gross, T. Beeler

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2016. (Anaheim, USA)

We present the first approach for high-quality lightweight eye capture, which leverages a database of pre-captured eyes to guide the reconstruction of new eyes from much less constrained inputs, such as traditional single-shot face scanners or even a single photo from the internet.

[Project Page]

An Anatomically Constrained Local Deformation Model for Monocular Face Capture

C. Wu, D. Bradley, M. Gross, T. Beeler

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2016. (Anaheim, USA)

We present a new anatomically-constrained local face model and fitting approach for tracking 3D faces from 2D motion data in very high quality. In contrast to traditional global models, we propose a local deformation model composed of many small subspaces spatially distributed over the face, and introduce anatomical subspace skin thickness constraints.

[Project Page]

FaceDirector: Continuous Control of Facial Performance in Video

C. Malleson, J.-C. Bazin, O. Wang, D. Bradley, T. Beeler, A. Hilton, A. Sorkine-Hornung

International Conference on Computer Vision (ICCV). 2015. (Santiago, Chile)

We present a method to continuously blend between multiple facial performances of an actor, which can contain different expressions or emotional states. As an example, given sad and angry video takes of a scene, our method empowers a movie director to specify arbitrary weighted combinations and smooth transitions between the two takes in post-production.

[Project Page]

Detailed Spatio-Temporal Reconstruction of Eyelids

A. Bermano, T. Beeler, Y. Kozlov, D. Bradley, B. Bickel, M. Gross

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2015. (Los Angeles, USA)

In recent years we have seen numerous improvements in 3D scanning and tracking of human faces. However, current methods are unable to capture one of the most important regions of the face: the eye region. In this work we present the first method for detailed spatio-temporal reconstruction of eyelids.

[Project Page]

Real-Time High-Fidelity Facial Performance Capture

C. Cao, D. Bradley, K. Zhou, T. Beeler

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2015. (Los Angeles, USA)

We present a real-time facial capture method that requires only a single video camera and no user-specific training, yet is able to capture the face with very high fidelity, including individual expression wrinkles.

[Project Page]

Recent Advances in Facial Appearance Capture

O. Klehm, F. Rousselle, M. Papas, D. Bradley, C. Hery, B. Bickel, W. Jarosz, T. Beeler

Computer Graphics Forum (Proceedings of Eurographics - State of the Art Reports). 2015. (Zurich, Switzerland).

Facial appearance capture is firmly established within academic research and used extensively across various application domains. This report provides an overview that can guide practitioners and researchers in assessing the tradeoffs between current approaches and identifying directions for future advances in facial appearance capture.

[Project Page]

High-Quality Capture of Eyes

P. Bérard, D. Bradley, M. Nitti, T. Beeler, M. Gross

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia). 2014. (Shenzhen, China).

We propose a novel capture system that is capable of accurately reconstructing all the visible parts of the eye: the white sclera, the transparent cornea and the non-rigidly deforming colored iris.

[Project Page]

Capturing and Stylizing Hair for 3D Fabrication

J. I. Echevarria, D. Bradley, D. Gutierrez, T. Beeler

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2014. (Vancouver, Canada).

In this paper we present the first method for *stylized* hair capture, a technique to reconstruct an individual’s actual hairstyle in a manner suitable for physical reproduction.

[Project Page]

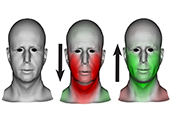

Rigid Stabilization of Facial Expressions

T. Beeler, D. Bradley

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2014. (Vancouver, Canada).

When scanning facial expressions to build a digital double, the resulting scans often contain both the non-rigid expression and unwanted rigid transformations. We present the first method to automatically stabilize the shapes by removing the rigid transformations.

[Project Page]

Facial Performance Enhancement Using Dynamic Shape Space Analysis

A. H. Bermano, D. Bradley, T. Beeler, F. Zünd, D. Nowrouzezahrai, I. Baran, O. Sorkine, H. Pfister, R. W. Sumner, B. Bickel, M. Gross

ACM Transactions on Graphics. 2014.

We present a technique for adding fine-scale details and expressiveness to low-resolution art-directed facial performances, such as those created manually using a rig, via marker-based capture, or by fitting a morphable model to a video.

[Project Page]

Local Signal Equalization for Correspondence Matching

D. Bradley, T. Beeler

International Conference on Computer Vision (ICCV). 2013. (Sydney, Australia).

In this paper we propose a local signal equalization approach for correspondence matching, which handles the situation when the input images contain different frequency signals, for example caused by different amounts of defocus blur.

[Project Page]

Modeling and Estimation of Internal Friction in Cloth

E. Miguel, R. Tamstorf, D. Bradley, S. C. Schvartzman, B. Thomaszewski, B. Bickel, W. Matusik, S. Marschner, M. A. Otaduy

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia). 2013. (Hong Kong).

We propose a model of internal friction for cloth, and show that this model provides a good match to important features of cloth hysteresis. We also present novel parameter estimation procedures based on simple and inexpensive setups.

[Project Page]

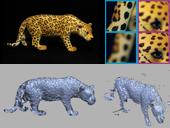

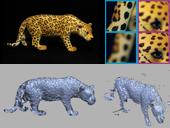

Image-based Reconstruction and Synthesis of Dense Foliage

D. Bradley, D. Nowrouzezahrai, P. Beardsley

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2013. (Anaheim, USA).

We present a method for reconstructing and synthesizing dense foliage from images. Starting with point-cloud data from multi-view stereo, the method iteratively fits an exemplar leaf to the points. A statistical model of leaf shape and appearance is then used to synthesize new leaves of the captured species.

[Project Page]

Improved Reconstruction of Deforming Surfaces by Cancelling Ambient Occlusion

T. Beeler, D. Bradley, H. Zimmer, M. Gross

European Conference on Computer Vision. 2012. (Florence, Italy).

We present a general technique for improving space-time reconstructions of deforming surfaces, by factoring out surface shading computed by a fast approximation to global illumination called ambient occlusion. Our approach simultaneously improves both the acquired shape as well as the tracked motion of the deforming surface.

[Project Page]

Physical Face Cloning

B. Bickel, P. Kaufmann, M. Scouras, B. Thomaszewski, D. Bradley, T. Beeler, P. Jackson, S. Marschner, W. Matusik, M. Gross

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2012. (Los Angeles, USA).

We propose a complete process for designing, simulating, and fabricating synthetic skin for an animatronics character that mimics the face of a given subject and its expressions.

[Project Page]

Data-Driven Estimation of Cloth Simulation Models

E. Miguel, D. Bradley, B. Thomaszewski, B. Bickel, W. Matusik, M. A. Otaduy, S. Marschner

Proceedings of Eurographics. 2012. (Cagliari, Italy).

This paper provides measurement and fitting methods that allow nonlinear cloth models to be fit to observed deformations of a particular cloth sample.

[Project Page]

Manufacturing Layered Attenuators for Multiple Prescribed Shadow Images

I. Baran, P. Keller, D. Bradley, S. Coros, W. Jarosz, D. Nowrouzezahrai, M. Gross

Proceedings of Eurographics. 2012. (Cagliari, Italy).

We present a practical and inexpensive method for creating physical objects that cast different color shadow images when illuminated by prescribed lighting configurations.

[Project Page]

High-Quality Passive Facial Performance Capture Using Anchor Frames

T. Beeler, F. Hahn, D. Bradley, B. Bickel, P. Beardsley, C. Gotsman, R. W. Sumner, M. Gross

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2011. (Vancouver, Canada).

We present a new technique for markerless facial performance capture based on anchor frames, which starts with high resolution geometry acquisition and proceeds to propagate a single triangle mesh through an entire performance.

[Project Page]

Globally Consistent Space Time Reconstruction

T. Popa, I. South-Dickinson, D. Bradley, A. Sheffer, W. Heidrich

Computer Graphics Forum (Proceedings of Eurographics Symposium on Geometry Processing). 2010. (Lyon, France).

We introduce a new method for globally consistent space-time geometry and motion reconstruction from video capture, which uses a gradual change prior to resolve inconsistencies and faithfully reconstruct the scanned objects.

[Project Page]

High Resolution Passive Facial Performance Capture

D. Bradley, W. Heidrich, T. Popa, A. Sheffer

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2010. (Los Angeles, USA).

We introduce a purely passive facial capture approach that uses only an array of video cameras, but requires no template facial geometry, no special makeup or markers, and no active lighting.

[Project Page]

Binocular Camera Calibration Using Rectification Error

D. Bradley, W. Heidrich

Proceedings of Canadian Conference on Computer and Robot Vision. 2010. (Ottawa, Canada).

We show that we can improve calibrations in a binocular or multi-camera setup by calibrating the cameras in pairs using a rectification error rather than a reprojection error.

[Paper]
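The rectification-error idea can be sketched as follows: after warping each image's correspondences with its rectifying homography, matching points in a correctly calibrated pair should lie on the same row, so the residual vertical disparity measures calibration quality. This minimal Python sketch computes an RMS vertical disparity for given homographies; the choice of RMS, the function names, and the assumption that the homographies are already available are illustrative, and a full calibration would optimize the camera parameters so that such an error is minimized.

```python
import math


def apply_homography(H, p):
    """Map a 2D point p = (x, y) through a 3x3 homography H (row-major lists)."""
    x, y = p
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w


def rectification_error(H_left, H_right, pts_left, pts_right):
    """RMS vertical disparity of correspondences after rectification."""
    sq = []
    for pl, pr in zip(pts_left, pts_right):
        yl = apply_homography(H_left, pl)[1]   # rectified row in left image
        yr = apply_homography(H_right, pr)[1]  # rectified row in right image
        sq.append((yl - yr) ** 2)
    return math.sqrt(sum(sq) / len(sq))
```

Unlike a reprojection error, this quantity depends only on how well the pair is aligned row-wise, which is what a stereo matcher operating on rectified images relies on.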

Synchronization and Rolling Shutter Compensation for Consumer Video Camera Arrays

D. Bradley, B. Atcheson, I. Ihrke, W. Heidrich

Proceedings of International Workshop on Projector-Camera Systems (PROCAMS). 2009. (Miami, USA).

We present two simple approaches for simultaneously removing rolling shutter distortions and performing temporal synchronization of consumer video cameras.

[Project Page]

Wrinkling Captured Garments Using Space-Time Data-Driven Deformation

T. Popa, Q. Zhou, D. Bradley, V. Kraevoy, H. Fu, A. Sheffer, W. Heidrich

Proceedings of Eurographics. 2009. (Munich, Germany).

In this work we propose a method for reintroducing fine folds into captured garment models using data-driven dynamic wrinkling.

[Project Page]

Augmented Reality on Cloth with Realistic Illumination

D. Bradley, G. Roth, P. Bose

Machine Vision and Applications. 2009. Vol. 20, No. 2, pp. 85-92. [Submitted Sept. 2005]

Augmented reality is the concept of inserting virtual objects into real scenes. We present a method to perform real-time flexible augmentations on cloth, including real-world illumination and shadows.

[Journal Page]

Time-resolved 3D Capture of Non-stationary Gas Flows

B. Atcheson, I. Ihrke, W. Heidrich, A. Tevs, D. Bradley, M. Magnor, H.-P. Seidel

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia). 2008. (Singapore).

We present a time-resolved Schlieren tomography system for capturing full 3D, non-stationary gas flows on a dense volumetric grid, taking a step in the direction of obtaining measurements of real fluid flows.

[Project Page]

Markerless Garment Capture

D. Bradley, T. Popa, A. Sheffer, W. Heidrich, T. Boubekeur

ACM Transactions on Graphics (Proceedings of SIGGRAPH). 2008. (Los Angeles, USA).

In this paper, we describe a marker-free approach to capturing garment motion by establishing temporally coherent parameterizations between incomplete geometries extracted at each timestep with a multiview stereo algorithm.

[Project Page]

Accurate Multi-View Reconstruction Using Robust Binocular Stereo and Surface Meshing

D. Bradley, T. Boubekeur, W. Heidrich

IEEE Computer Vision and Pattern Recognition (CVPR). 2008. (Anchorage, USA).

We present a new algorithm for multi-view reconstruction that is both accurate and efficient. Our method is based on robust binocular stereo matching, followed by adaptive point-based filtering and high-quality mesh generation.

[Project Page]

Adaptive Thresholding Using the Integral Image

D. Bradley, G. Roth

ACM Journal of Graphics Tools. 2007. Vol. 12, No. 2, pp. 13-21.

We present a technique for real-time adaptive thresholding using the integral image of the input, which is robust to illumination changes in the image.

[Journal Page]
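The core of integral-image adaptive thresholding can be sketched in a few lines: one pass builds the integral image, and each pixel is then compared against the mean of an s×s window around it, obtained with four lookups regardless of window size. The Python sketch below assumes an 8-bit grayscale image stored as a list of rows; the defaults (window of width/8 and t = 15 percent) follow the commonly cited choice for this method but should be treated as tunable, and the function name is hypothetical.

```python
def adaptive_threshold(gray, s=None, t=15):
    """Binarize a grayscale image (list of rows of ints) against local means.

    s: window size (defaults to width // 8). A pixel at least t percent darker
    than the mean of its s x s neighborhood becomes 0; all others become 255.
    """
    h, w = len(gray), len(gray[0])
    if s is None:
        s = max(w // 8, 1)
    half = s // 2
    # Integral image with an extra zero row/column:
    # ii[y][x] = sum of gray[r][c] for all r < y and c < x.
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += gray[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        y0, y1 = max(y - half, 0), min(y + half + 1, h)
        for x in range(w):
            x0, x1 = max(x - half, 0), min(x + half + 1, w)
            count = (y1 - y0) * (x1 - x0)
            # Window sum in O(1) from four integral-image lookups.
            total = ii[y1][x1] - ii[y0][x1] - ii[y1][x0] + ii[y0][x0]
            # Integer form of: pixel > local_mean * (100 - t) / 100.
            if gray[y][x] * count * 100 > total * (100 - t):
                out[y][x] = 255
    return out
```

Because each pixel is judged only relative to its neighborhood, a slow illumination gradient across the image shifts the local mean along with the pixel values and does not by itself flip the result.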

Tomographic Reconstruction of Transparent Objects

B. Trifonov, D. Bradley, W. Heidrich

Eurographics Symposium on Rendering 2006. (Nicosia, Cyprus)

We present a visible light tomographic reconstruction method for recovering the shape of transparent objects, such as glass. Our setup is simple to implement and accounts for refraction, which can be a problem in visible light tomography.

[Paper]

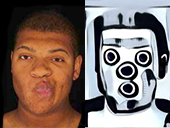

Natural Interaction with Virtual Objects Using Vision-Based Six DOF Sphere Tracking

D. Bradley, G. Roth

Advances in Computer Entertainment Technology 2005. pp. 19-26. (Valencia, Spain).

This paper describes a new tangible user interface system that includes a passive optical tracking method to determine the six degree-of-freedom (DOF) pose of a sphere in a real-time video stream.

[ACM Page]

Image-based Navigation in Real Environments Using Panoramas

D. Bradley, A. Brunton, M. Fiala, G. Roth

IEEE International Workshop on Haptic Audio Visual Environments and their Applications 2005. (Ottawa, Canada).

We present a system for virtual navigation in real environments using image-based panorama rendering. A real-time image-based viewer renders individual 360-degree panoramas using graphics hardware acceleration.

[IEEE Page]